Let’s Chat (GPT) about Artificial Intelligence Integration in the Classroom

Universities are in a near state of hysteria over how to deal with artificial intelligence (AI) programs. The alarm has been raised by programs such as Chat GPT, which can provide students with fully written assignments without any student learning taking place. Educators are working out how to integrate, assess, or eliminate this tool. Faculty reactions have ranged from adamant demands that all AI programs be banned to calls for determining how best to integrate their use into the classroom. Throughout the history of innovation, banning the products of innovation has consistently failed, which leaves integrating them in a way that complements student learning as the only viable alternative.

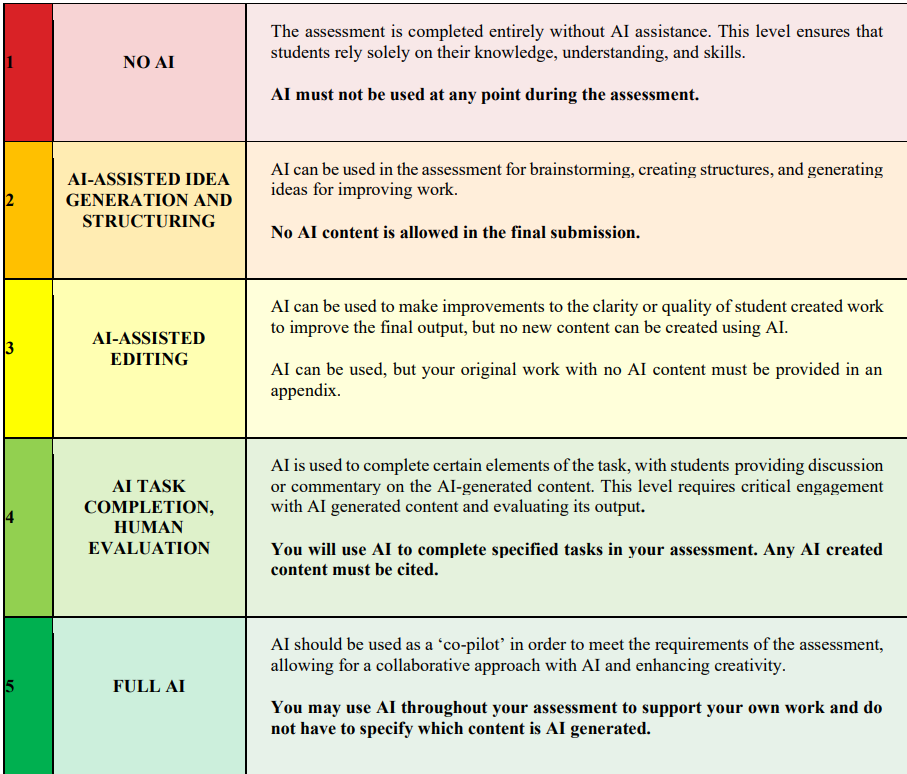

Like faculty at other institutions, University of Saskatchewan (U of S) faculty are grappling with how to use AI in classroom learning and assignments. This case study focuses on a U of S initiative to explore the use of AI in a fourth-year Economics of Innovation assignment, which aimed to evaluate the advantages and limitations of AI, specifically Chat GPT, in academic tasks. In December 2023, the U of S provided faculty with a typology of AI and encouraged them to use the Winter Term (January to April 2024) to ‘experiment’ with AI in their classrooms and courses. The typology, provided for use as a metric, has five tiers and is known as the AI Assessment Scale (Figure 1).

Figure 1: AI Assessment Scale

During the January-April 2024 term, I asked the students in my fourth-year class on The Economics of Innovation whether they would be willing to take part in an assignment on the use and assessment of Chat GPT. The students agreed that the proposed assignment sounded interesting and were willing to see what I could develop. Rather than being evaluated on their ability to use the AI technology, they were tasked with determining whether its use proved advantageous and where it succeeded or failed within the limits of the assignment.

Assignment Details

The assignment asked students to write a short essay (500-600 words) on “What are the top three factors for increasing crop productivity?” using the various levels of the U of S AI typology. Students worked in pairs, and two pairs were randomly assigned to each of the five AI typologies. The guidelines for each typology allowed a different level of AI-generated content in the essay. Each essay was required to have five paragraphs: an introduction, one paragraph on each of the three factors, and a conclusion. References were to be included but did not count toward the word count.

Assignment Guidelines:

- AI typology 1: no use of Chat GPT for the essay.

- AI typology 2: one AI-generated revision allowed.

- AI typology 3: five AI-generated revisions allowed.

- AI typology 4: ten AI-generated revisions allowed.

- AI typology 5: unlimited AI-generated revisions allowed.

Objective:

To gain insight into the advantages and limitations of using AI programs like Chat GPT for research and knowledge communication.

Assignment Assessment

The essays were posted anonymously on the course webpage, and students were instructed to review and assess their peers’ work using a structured online metric (Table 1). Each student reviewed the nine essays written by the other groups, determined which of the five AI typologies had been used for each one, and provided detailed justifications for their assessments.

Table 1: Essay assessment metric

| Essay structure | Your grade (1-10) for each category |
|---|---|
| Quality of introduction paragraph – how well does this relate to the objective of the assignment and provide a logical introduction to the rest of the text? | |
| Quality of first factor paragraph – does the paragraph provide details regarding how to increase crop productivity? Does the suggestion for increasing productivity seem logical? | |
| Quality of second factor paragraph – does the paragraph provide details regarding how to increase crop productivity? Does the suggestion for increasing productivity seem logical? | |
| Quality of third factor paragraph – does the paragraph provide details regarding how to increase crop productivity? Does the suggestion for increasing productivity seem logical? | |
| Quality of concluding paragraph – do the conclusions align with the context of the various factors? | |
| Overall readability/spelling errors, etc. | |
| Total | |
| Level of AI that you believe was applied (1-5) | |

After evaluating each essay, students used a text box to explain how they had determined which AI category was used to generate it. Responses had to be descriptive and to comment on which aspects of the essay (quality of the writing, word use, accuracy, statistics, believability) led to their decision.

Students were not marked on the essay their group submitted, as that would have introduced bias into the marking, nor were their marks affected by their peers’ evaluations. Instead, each student’s grade was based on whether they contributed to preparing their group’s essay, on their reviews of the other nine essays, and on their written assessment statements. This assessed students on how they interacted with the material generated by Chat GPT and with their peers’ work, and on how well their interpretation of each essay reflected the assignment brief. The assignment was worth 25% of the final grade, divided into three components:

- 5% for writing and submitting the essay.

- 10% for conducting the essay reviews and providing assessments.

- 10% for my assessment of the explanation paragraphs.

The Results

The assignment brief asked students to write about the three most important factors for increasing crop productivity. The groups writing under AI typologies 3-5 all identified the same three factors: technological, environmental (sustainability improvement), and biological (crop genetics). In contrast, the factors chosen by the groups using limited AI varied. The peer evaluations showed that students were most successful at identifying essays written without AI (typology 1) and less successful with essays written with varying degrees of AI use (typologies 2-5): averaged across the two essays at each level, correct identification was 63% for typology 1, compared with roughly 24% to 29% for typologies 2-5 (Table 2). Interestingly, essays with higher AI involvement were perceived as more generic, lacking detailed language and specific domain knowledge.

Essays that students rated as typology 1 or 2 made greater use of detailed language, such as statistics to support their arguments (Table 2). Students also noted that the more AI was applied, the more an essay used words that fourth-year agricultural students would never use in their own writing.

Table 2: Student Essay Review

| Typology | Correct assessments | Range of typology estimates | Average peer review mark |
|---|---|---|---|
| 1 | 59% | 1-5 | 81% |
| 1 | 67% | 1-5 | 69% |
| 2 | 24% | 1-5 | 71% |
| 2 | 33% | 1-5 | 82% |
| 3 | 18% | 1-5 (no 3) | 79% |
| 3 | 29% | 1-5 | 69% |
| 4 | 35% | 1-5 | 80% |
| 4 | 18% | 1-5 | 79% |
| 5 | 33% | 1-4 | 80% |
| 5 | 25% | 2-5 | 76% |

Objective Success

The objective was to help students who would be entering the workforce within a few months better understand the abilities and limitations of AI programs, which will be readily available throughout their professional lives. The intent was to help students evaluate, if they use AI like Chat GPT, what its benefits and limitations are and how it affects their own voice and understanding. In comments after the assignment was completed, students observed that they need a good grasp of the subject matter to be able to remove AI-generated content that is inaccurate or not pertinent to their topic. They realized that relying entirely on AI to generate accurate text is not a sound strategy. This learning outcome indicates that the assignment achieved its objective.

The case study highlights the nuanced impact of AI on student assignments. While AI can automate tasks, it also raises questions about content quality and student learning. Integrating AI into education requires thoughtful design and consideration of its role in enhancing rather than replacing human expertise.

Key Findings

- AI Influence on Content: Students noted that AI-generated content tended to be generic and less domain-specific, leading to concerns about accuracy and relevance.

- Student Learning: The assignment helped students recognize the limitations of AI and the importance of human expertise in refining and contextualizing AI-generated content.

- Educational Impact: Integrating AI into assignments can enhance awareness of AI capabilities and limitations among students, preparing them for professional settings where AI is increasingly utilized.